GNNs + Knowledge Graphs

The Power of Pattern Recognition and the Butterfly Effect in AI

Pattern recognition has always been humanity’s quiet superpower. For early humans, this ability meant survival—identifying which plants were safe to eat, which skies signaled rain, or where predators might lurk. Today, while our survival no longer depends on recognizing patterns in the wild, the skill remains indispensable. Entrepreneurs, scientists, and innovators rely on it to navigate complex challenges, uncover opportunities, and drive breakthroughs. Spotting hidden trends, connecting seemingly unrelated ideas, or interpreting massive amounts of data can be the difference between mediocrity and groundbreaking success.

My background in physics and quantitative finance has shown me how closely these fields are linked by their shared reliance on pattern recognition. Financial models often borrow principles from physics. The Black-Scholes option pricing model, a foundational financial tool, can be transformed by a change of variables into the heat equation from physics. Both describe a diffusion process: one tracks how heat spreads through a material over time, the other how an option’s value evolves as expiry approaches and the price of the underlying asset moves randomly. This kind of cross-disciplinary pattern-seeking is one of the reasons I find Graph Neural Networks (GNNs) so fascinating. GNNs go beyond making predictions: they model and learn from the relationships within interconnected systems, uncovering patterns and dependencies that offer valuable insights into complex networks.
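For readers who want to see that correspondence concretely, here is the standard textbook change of variables. The notation is introduced here, not taken from the text: V(S, t) is the option's value, S the underlying asset's price, t time, K the strike price, T the expiry date, r the risk-free rate, and σ the volatility. The Black-Scholes equation is

$$
\frac{\partial V}{\partial t} + \tfrac{1}{2}\sigma^2 S^2 \frac{\partial^2 V}{\partial S^2} + rS\frac{\partial V}{\partial S} - rV = 0 .
$$

Substituting S = K e^x, τ = ½σ²(T − t), V = K v(x, τ), and writing k = 2r/σ², gives

$$
\frac{\partial v}{\partial \tau} = \frac{\partial^2 v}{\partial x^2} + (k-1)\frac{\partial v}{\partial x} - k v ,
$$

and a final substitution v = e^{-\frac{1}{2}(k-1)x - \frac{1}{4}(k+1)^2\tau}\, u(x, \tau) removes the last two terms, leaving the heat equation

$$
\frac{\partial u}{\partial \tau} = \frac{\partial^2 u}{\partial x^2} .
$$

Because τ counts time backward from expiry, the option's known payoff at expiry plays the role of the heat equation's initial temperature profile.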

Reading Chaos by James Gleick further opened my eyes to the world of interconnected systems. Gleick’s exploration of chaos theory illuminated how small, seemingly insignificant actions can cascade into massive, unpredictable outcomes. This is where the concept of the “butterfly effect” comes in.

The Butterfly Effect | A Lens for Understanding GNNs

Imagine a butterfly flapping its delicate wings in Brazil. This flutter seems inconsequential—a small movement in the vastness of nature. Yet, this seemingly trivial action can set off a remarkable chain reaction. When the butterfly flaps its wings, it disturbs the surrounding air, creating a slight change in air pressure. This initial disruption ripples through the atmosphere, affecting the movement of nearby air particles, which shift and swirl to form micro-currents. These small air currents may not seem significant at first, but they can lead to larger air movements as they interact with other currents in the sky.

As these air particles continue to move, they collide with other air masses—some warm and moist, others cooler and drier. This interaction affects temperature and humidity, influencing cloud formation. Eventually, the butterfly’s initial flap contributes to a larger atmospheric system that could lead to significant weather changes, such as rainfall in a distant region. As these weather patterns evolve, they influence even larger systems, such as hurricanes. What was once a minor disturbance—a butterfly’s wing—can ultimately change the trajectory of a powerful storm, impacting thousands of lives.
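To see sensitive dependence on initial conditions in action, the sketch below integrates Edward Lorenz's 1963 model of atmospheric convection from two starting points that differ by one part in a hundred million. The classic parameter values (σ = 10, ρ = 28, β = 8/3), the fourth-order Runge-Kutta integrator, the starting point, and the step size are standard choices assumed for illustration.

```python
# Lorenz (1963) convection model with its classic parameter values.
SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0


def lorenz(state):
    """Time derivatives (dx/dt, dy/dt, dz/dt) of the Lorenz system."""
    x, y, z = state
    return (SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step of size dt."""
    def shift(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz(state)
    k2 = lorenz(shift(state, k1, dt / 2))
    k3 = lorenz(shift(state, k2, dt / 2))
    k4 = lorenz(shift(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def separation_over_time(nudge=1e-8, dt=0.01, steps=5000):
    """Run two copies whose starting x differs by `nudge`; record |x_a - x_b| at each step."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + nudge, 1.0, 1.0)
    gaps = [abs(a[0] - b[0])]
    for _ in range(steps):
        a, b = rk4_step(a, dt), rk4_step(b, dt)
        gaps.append(abs(a[0] - b[0]))
    return gaps


gaps = separation_over_time()
print(f"initial gap: {gaps[0]:.1e}, largest later gap: {max(gaps):.1f}")
```

The two runs track each other closely at first, but well before the end their x-values differ by an amount comparable to the width of the attractor itself: the "flap" has changed the outcome.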

GNNs strike me as a modern-day version of the “butterfly effect.” Instead of predicting chaotic weather, they uncover the subtle relationships and interactions within networks of data. Imagine each data point (a customer, a molecule, or a social media post) as a node in an ever-evolving web. As in chaos theory, a slight change in one node’s features can ripple outward: each round of message passing carries it one step further through the node’s connections, shifting the learned representations, or “embeddings,” of nodes several hops away. By focusing on these relationships, GNNs create a map not just of data but of connections, showing how each part of a system influences the whole.
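The ripple can be traced directly. This minimal sketch uses a hypothetical five-node chain and a parameter-free layer in which each node simply averages its own value with its neighbors' values; real GNN layers add learned weights and nonlinearities, but the reach of a change is bounded the same way: after k layers it can have travelled at most k hops.

```python
def mean_aggregate(features, neighbors):
    """One parameter-free message-passing round: each node averages itself with its neighbors."""
    return {
        node: sum(features[m] for m in [node] + neighbors[node]) / (1 + len(neighbors[node]))
        for node in features
    }


def affected_by_layer(neighbors, start, layers, nudge=1.0):
    """After each layer, list the nodes whose value differs because `start` was nudged."""
    base = {n: 0.0 for n in neighbors}
    tweaked = dict(base)
    tweaked[start] = nudge  # the "butterfly": one node's feature changes slightly
    history = []
    for _ in range(layers):
        base = mean_aggregate(base, neighbors)
        tweaked = mean_aggregate(tweaked, neighbors)
        history.append(sorted(n for n in neighbors if tweaked[n] != base[n]))
    return history


# A five-node chain: 0 - 1 - 2 - 3 - 4
chain = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
for depth, nodes in enumerate(affected_by_layer(chain, start=0, layers=3), start=1):
    print(f"after {depth} layer(s): {nodes}")
```

The affected set grows one hop per layer: [0, 1], then [0, 1, 2], then [0, 1, 2, 3]. In this averaging version the nudge is diluted as it spreads; in a trained model, the learned weights determine whether such a change is damped or amplified.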

Learning the embeddings in a GNN is a bit like adjusting the “initial conditions” that chaos theory taught us are so vital. As training refines these representations, each data point absorbs information from the points it is connected to, which is what lets the model predict which customer might churn or which molecule might react in a specific way. It’s as if we’re tuning the “initial state” of a complex system and guiding it to reveal patterns that were otherwise hidden.

Building Blocks: Neural Networks and GNNs

To understand GNNs, let’s first look at how a standard neural network is structured. A neural network is a pattern-recognizing machine that learns to identify features by analyzing data. At its core, it consists of neurons: units that each compute a weighted sum of their inputs and pass the result through a simple nonlinear function. During training, the network adjusts these internal settings, or weights, so that each neuron responds more accurately to specific patterns.
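Here is a minimal sketch of a single neuron learning by adjusting its weights. It assumes a sigmoid activation, a toy dataset (the logical AND of two inputs), and full-batch gradient descent on the cross-entropy loss; the learning rate and number of epochs are illustrative choices, not tuned values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x, w, b):
    """Weighted sum of the inputs plus a bias, squashed into the range (0, 1)."""
    return sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)


def train(data, lr=1.0, epochs=5000):
    """Full-batch gradient descent on the cross-entropy loss."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, target in data:
            # For sigmoid + cross-entropy, d(loss)/d(weighted sum) = prediction - target.
            err = neuron(x, w, b) - target
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        # Move each weight a small step against its average gradient.
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train(AND_DATA)
predictions = [round(neuron(x, w, b)) for x, _ in AND_DATA]
print("weights:", w, "bias:", b, "predictions:", predictions)
```

Starting from all-zero weights, the neuron ends up firing only for the input (1, 1): the "pattern" it has learned lives entirely in the adjusted weights and bias.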

Neurons are organized in layers. Data passes through each layer, where neurons detect features of increasing complexity. For example, in an animal-recognition network:

- The first layer might detect edges or colors.

- Middle layers might detect shapes like eyes or paws.

- Final layers combine these shapes into a decision, like identifying a cat or a dog.
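Stacking layers is what lets a network build complex features out of simple ones. The classic illustration is XOR ("one input or the other, but not both"), which no single neuron of this kind can compute. In the sketch below the weights are hand-picked rather than learned, and a simple step activation stands in for the smooth functions real networks use: the hidden layer detects two simple features, and the output layer combines them.

```python
def step(z):
    """Fire (1) when the weighted sum is positive, otherwise stay silent (0)."""
    return 1 if z > 0 else 0


def layer(x, weights, biases):
    """Each row of `weights` is one neuron; every neuron sees the whole input vector."""
    return [step(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(weights, biases)]


# Hidden layer (hand-picked weights): neuron 1 detects "at least one input on" (OR),
# neuron 2 detects "not both inputs on" (NAND).
HIDDEN_W = [[1, 1], [-1, -1]]
HIDDEN_B = [-0.5, 1.5]

# Output layer: fires only when both hidden features are present, which yields XOR.
OUT_W = [[1, 1]]
OUT_B = [-1.5]


def xor_net(x):
    hidden = layer(x, HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUT_W, OUT_B)[0]


for inputs in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(inputs, "->", xor_net(inputs))
```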

This layered learning process works well for data with a regular structure, such as images or sequences, and specialized architectures are designed to exploit that structure. Convolutional Neural Networks (CNNs) excel at image recognition, while Recurrent Neural Networks (RNNs) work well with sequences like text or music, where each part depends on the previous ones.

CNNs: Mastering Grid-Like Patterns in Images

CNNs are specialized for grid-like data, such as images, where each pixel has a fixed spatial relationship to its neighbors. When we look at an image, we recognize patterns like edges, shapes, or textures. CNNs detect these with convolutional layers, which slide small filters (tiny grids of learned weights) across the image and record how strongly each patch matches, producing a feature map that shows where each pattern appears.
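A minimal sketch of a single convolution, assuming a tiny hypothetical grayscale image and a hand-picked vertical-edge filter (in a trained CNN the filter values would be learned). As in most deep-learning libraries, the operation is technically a cross-correlation with no padding and a stride of 1.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution: place the kernel at every position where it fits
    and sum the elementwise products to get one feature-map value."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


# Hypothetical 5x6 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked vertical-edge filter: negative on the left, positive on the right.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

Every row of the feature map reads [0, 3, 3, 0]: the filter responds only where its window straddles the dark-to-bright boundary, which is exactly the "where does this pattern appear" map described above.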

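Pooling layers then simplify this information by summarizing small regions of each feature map, for example by keeping only the strongest response in each region. This keeps the essential patterns while reducing the amount of data, and makes the network more tolerant of small shifts in where a pattern appears. Together with convolution, which applies the same filter at every position, this helps CNNs recognize objects in different positions across an image.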
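Max pooling, the most common variant, can be sketched in a few lines. The two 4x4 feature maps below are made up: they contain the same strong response, shifted by one pixel.

```python
def max_pool(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [max(fmap[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]


original = [[9, 0, 0, 0],
            [0, 0, 0, 0],
            [0, 0, 0, 0],
            [0, 0, 0, 0]]
shifted = [[0, 0, 0, 0],
           [0, 9, 0, 0],
           [0, 0, 0, 0],
           [0, 0, 0, 0]]

print(max_pool(original))
print(max_pool(shifted))
```

Both maps pool to [[9, 0], [0, 0]], so the next layer sees the same summary despite the shift. The tolerance is local: a shift that moves the response into a different pooling window would change the output.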

RNNs: Capturing Context in Sequences

RNNs are better suited for sequences, like sentences or music, where each element depends on what came before. Imagine reading a sentence—each word relies on previous words to form context. RNNs process each element of a sequence, “remembering” past information through hidden states, which carry details from one step to the next.

This loop lets RNNs carry context from one step to the next, which made them a long-standing choice for tasks like language translation, where capturing dependencies across a sequence is crucial.
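The hidden-state loop can be written as a one-unit recurrent cell, h_t = tanh(w_x · x_t + w_h · h_{t-1} + b). The weights below are arbitrary illustrative values, not trained ones; the point is that the same inputs in a different order leave the network in a different state.

```python
import math


def rnn_final_state(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Run a one-unit RNN over `sequence` and return the final hidden state."""
    h = 0.0  # the hidden state starts empty
    for x in sequence:
        # The new state mixes the current input with the previous state (the "memory").
        h = math.tanh(w_x * x + w_h * h + b)
    return h


early = rnn_final_state([1.0, 0.0, 0.0])  # a signal at the start, then silence
late = rnn_final_state([0.0, 0.0, 1.0])   # the same values in a different order
print(round(early, 3), round(late, 3))
```

The signal that arrived first is still present in the final state, only faded, while the signal that arrived last dominates it. That fading memory is also why plain RNNs struggle with very long-range dependencies.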

GNNs

CNNs and RNNs have opened new possibilities, yet they’re limited by the regular structure they require: a grid or a sequence. Much real-world data exists in networks of relationships, like social networks, molecular structures, or transportation systems. These systems aren’t neat grids or sequences. They are graphs: webs of interconnected entities in which each entity can have any number of connections, and relationships can overlap and vary.

To analyze these complex systems, we need a neural network that can handle data arranged as flexible networks rather than rigid patterns. This is where GNNs step in.
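A common way to store such a graph is an adjacency list. The small social graph below is hypothetical; what matters is that, unlike a pixel, which always has the same fixed set of neighbors, each node here has a different number of connections, and those connections have no natural order.

```python
from collections import defaultdict

# Hypothetical friendships, stored as an edge list of (person, person) pairs.
edges = [("ana", "ben"), ("ana", "cai"), ("ana", "dev"), ("ben", "cai"), ("eli", "dev")]


def to_adjacency(edge_list):
    """Undirected adjacency list: each node maps to the set of nodes it is linked to."""
    adj = defaultdict(set)
    for u, v in edge_list:
        adj[u].add(v)
        adj[v].add(u)
    return dict(adj)


adj = to_adjacency(edges)
degrees = {node: len(nbrs) for node, nbrs in sorted(adj.items())}
print(degrees)
```

Because neighbor counts vary and neighbors are unordered, GNN layers combine neighbor information with order-independent operations such as sum, mean, or max.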

How GNNs Operate

- Initial Representation: Think of each node in a GNN as a person in a social network, each with a unique profile (a vector of initial features). These features could be simple, like “age” or “location,” or more complex, like transaction history.

- Message Passing: In a GNN, nodes don’t act alone. Each node communicates with its immediate neighbors, exchanging information. Imagine a social circle where each person shares their recent activities. Through this process, each node builds a richer understanding of its context.

- Aggregation and Update: After receiving messages from its neighbors, each node summarizes them, for example by averaging (aggregation), and combines that summary with its own current representation, which in the first layer is its original profile (update). For instance, if a friend in your network is a frequent shopper, this could influence your predicted shopping behavior.

- Layer Stacking: GNNs stack multiple layers of message passing, allowing each node to learn from neighbors and “friends of friends.” This deepens the network’s understanding of broader patterns.

- Output Prediction: After several rounds of message passing and aggregation, each node holds a representation enriched by both its profile and connections, used to make predictions, such as identifying potential fraud or recommending a product.
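The five steps above fit in one small sketch. Everything specific in it is hypothetical: the four people, their two-number profiles, the choice of mean aggregation with a ReLU update, and the weights, which are made up here rather than learned from labeled data as they would be in a real model. The output is read as a score for "likely frequent shopper."

```python
import math


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def relu(v):
    return [max(0.0, x) for x in v]


def gnn_layer(h, neighbors, w_self, w_nbr):
    """One round of message passing, mean aggregation, and update for every node."""
    new_h = {}
    for node, own in h.items():
        msgs = [h[n] for n in neighbors[node]]  # messages: neighbors' current vectors
        agg = [sum(col) / len(msgs) for col in zip(*msgs)] if msgs else [0.0] * len(own)
        # Update: combine the node's own vector with the aggregated neighborhood.
        new_h[node] = relu([a + b for a, b in zip(matvec(w_self, own), matvec(w_nbr, agg))])
    return new_h


def predict(h, w_out):
    """Output: squash each final representation into a score between 0 and 1."""
    return {node: 1 / (1 + math.exp(-sum(a * b for a, b in zip(w_out, vec))))
            for node, vec in h.items()}


# Initial representation (hypothetical): [normalized age, normalized monthly spend].
features = {"ana": [0.3, 0.9], "ben": [0.5, 0.1], "cai": [0.7, 0.2], "dev": [0.2, 0.8]}
neighbors = {"ana": ["ben", "dev"], "ben": ["ana", "cai"], "cai": ["ben"], "dev": ["ana"]}

# Made-up weights standing in for learned ones.
W_SELF = [[1.0, 0.0], [0.0, 1.0]]
W_NBR = [[0.5, 0.0], [0.0, 0.5]]
W_OUT = [-1.0, 2.0]

h = features
for _ in range(2):  # layer stacking: two layers reach neighbors and friends of friends
    h = gnn_layer(h, neighbors, W_SELF, W_NBR)
scores = predict(h, W_OUT)
print({node: round(score, 2) for node, score in scores.items()})
```

Dev, a high spender whose only friend (Ana) is also a high spender, ends up with a much higher score than Cai, whose neighborhood spends little.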

Insights from the Fireside Chat - Hybrid Models and Applications

Hybrid models combining Graph Neural Networks (GNNs) and Knowledge Graphs represent a significant step forward in how we process and understand complex data. Together, they offer a unique synergy: GNNs excel at identifying intricate relationships within data, while Knowledge Graphs store facts as explicit entities linked by named relationships (for example, a drug that treats a condition), a structure that people can read and query directly. This pairing is particularly impactful in industries like healthcare, finance, and customer service, where understanding nuanced relationships is critical.
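At its simplest, a knowledge graph is a set of (subject, relation, object) triples that can be queried by pattern. The medical triples below are illustrative only, and the `query` helper is a hypothetical stand-in for a real graph query language.

```python
# A tiny, hypothetical medical knowledge graph stored as (subject, relation, object) triples.
TRIPLES = [
    ("metformin", "treats", "type_2_diabetes"),
    ("type_2_diabetes", "risk_factor_for", "heart_disease"),
    ("statin", "treats", "high_cholesterol"),
    ("high_cholesterol", "risk_factor_for", "heart_disease"),
    ("patient_42", "diagnosed_with", "type_2_diabetes"),
]


def query(triples, subject=None, relation=None, obj=None):
    """Return every triple matching the pattern; None acts as a wildcard."""
    return [
        (s, r, o) for s, r, o in triples
        if (subject is None or s == subject)
        and (relation is None or r == relation)
        and (obj is None or o == obj)
    ]


# Which conditions are risk factors for heart disease?
for fact in query(TRIPLES, relation="risk_factor_for", obj="heart_disease"):
    print(fact)
```

Each answer is itself a readable fact, which is the interpretability that Knowledge Graphs bring to the pairing.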

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) improves the accuracy of AI systems by combining two processes: retrieving relevant information from an external source, such as a document store or database, and passing it to a language model as context for generating a response. The method works particularly well in customer support. Because the answer is grounded in retrieved material rather than only in what the model learned during training, RAG helps systems give answers that are both accurate and traceable to a source. This approach is especially valuable where details and context are paramount, such as in legal or medical support systems.
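The retrieve-then-generate flow can be sketched without any model at all. In this minimal version the support articles are hypothetical, retrieval uses simple bag-of-words cosine similarity (production systems typically use learned embeddings and a vector index), and the generation step is left as a comment because it depends on whichever language model is used.

```python
import math
import re
from collections import Counter

DOCS = {  # hypothetical support articles
    "reset-password": "To reset your password, open Settings, choose Security, and select Reset password.",
    "billing-cycle": "Invoices are issued on the first day of each billing cycle.",
    "export-data": "You can export your data as a CSV file from the Account page.",
}


def bag_of_words(text):
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing words
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, docs, k=1):
    """Retrieval step: rank documents by similarity to the question, keep the top k."""
    q = bag_of_words(question)
    ranked = sorted(docs, key=lambda d: cosine(q, bag_of_words(docs[d])), reverse=True)
    return ranked[:k]


def build_prompt(question, docs, k=1):
    """Augmentation step: place the retrieved text in front of the question."""
    context = "\n".join(docs[d] for d in retrieve(question, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("How do I reset my password?", DOCS)
print(prompt)
# Generation step (not shown): send `prompt` to a language model.
```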

Vectorization and Embeddings in Hybrid Models

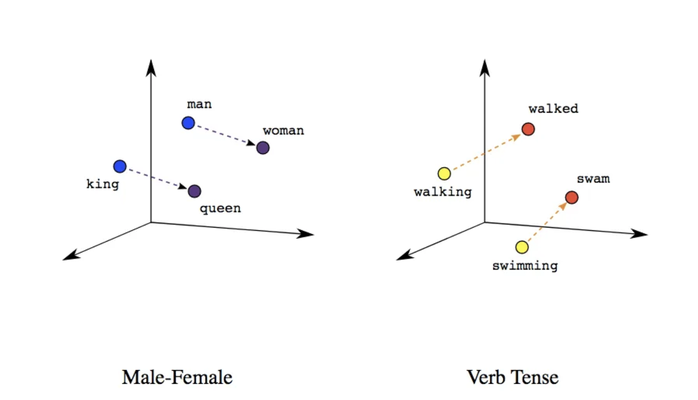

Hybrid models rely on two key techniques for understanding data: vectorization and embeddings.

- Vectorization converts raw data, such as text or images, into numerical arrays (vectors), allowing AI to detect patterns and relationships mathematically.

- Embeddings take this a step further: they are dense vectors, usually learned from data, arranged so that related data points, such as similar words or customers with similar behavior, lie close together.
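The difference between the two techniques shows up as soon as we measure similarity. In this sketch the three-word vocabulary is arbitrary, and the embedding values are made up to stand in for learned ones; cosine similarity is one common way to compare vectors.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


VOCAB = ["cat", "kitten", "car"]

# Vectorization: one-hot vectors turn each word into numbers, but every pair of
# different words ends up equally unrelated (cosine similarity 0).
one_hot = {word: [1.0 if i == j else 0.0 for j in range(len(VOCAB))]
           for i, word in enumerate(VOCAB)}

# Embeddings: made-up 3-d vectors standing in for learned ones.
embedding = {"cat": [0.9, 0.1, 0.0], "kitten": [0.85, 0.8, 0.0], "car": [0.0, 0.1, 0.95]}


def similarity(vectors, a, b):
    return round(cosine(vectors[a], vectors[b]), 2)


for a, b in [("cat", "kitten"), ("cat", "car")]:
    print(a, b, "one-hot:", similarity(one_hot, a, b), "embedding:", similarity(embedding, a, b))
```

One-hot vectors rate "kitten" and "car" as equally unrelated to "cat"; the embedding places "cat" far closer to "kitten," which is the kind of structure hybrid models rely on.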

For instance, a hybrid model in customer service could represent each customer’s behavior as a vector and use embeddings to find customers with similar purchasing habits, leading to more precise predictions or personalized recommendations. Because these representations turn similarity into arithmetic on vectors, hybrid models can compare and combine data from large, interconnected, and changing datasets efficiently.

Hybrid AI models leverage the complementary strengths of GNNs and Knowledge Graphs to tackle data with complex, dynamic relationships. While GNNs excel at identifying patterns and connections in networked data, Knowledge Graphs organize that data into structured formats, adding layers of meaning and context. Imagine GNNs as a system that analyzes traffic flow, predicting congestion and movement patterns. Knowledge Graphs, on the other hand, are like an atlas that organizes information about roads, public transport, and landmarks, enabling city planners not only to analyze traffic but also to make informed decisions about infrastructure based on both real-time predictions and structured insights.
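One established way to let a GNN use a Knowledge Graph's typed relationships is a relational graph convolution (R-GCN), in which each relation type gets its own weight, so a "shares a device with" link can count differently from a "sent money to" link. The sketch below is a simplified, scalar version of that idea, not necessarily the architecture discussed in the chat: the accounts, relations, risk values, and weights are all hypothetical, and a real R-GCN learns a weight matrix per relation.

```python
# Hypothetical typed edges from a knowledge graph: (source, relation, target).
EDGES = [
    ("acct_A", "sent_money_to", "acct_B"),
    ("acct_C", "sent_money_to", "acct_B"),
    ("acct_D", "shares_device_with", "acct_B"),
]

# One scalar weight per relation type (made up; an R-GCN would learn these).
REL_WEIGHT = {"sent_money_to": 0.5, "shares_device_with": 2.0}
SELF_WEIGHT = 1.0


def relational_layer(h, edges):
    """Each node keeps its own signal and adds neighbor messages scaled by relation
    type, averaging over the neighbors that arrived through each relation."""
    incoming = {}  # (target, relation) -> list of source values
    for src, rel, dst in edges:
        incoming.setdefault((dst, rel), []).append(h[src])
    new_h = {node: SELF_WEIGHT * value for node, value in h.items()}
    for (dst, rel), values in incoming.items():
        new_h[dst] += REL_WEIGHT[rel] * sum(values) / len(values)
    return new_h


# Hypothetical scalar "risk" feature per account: acct_D was previously flagged.
h0 = {"acct_A": 0.1, "acct_B": 0.0, "acct_C": 0.1, "acct_D": 1.0}
h1 = relational_layer(h0, EDGES)
print(h1)
```

Account B's risk rises mostly because of the device it shares with a flagged account, and the relation name that drove the change is available for inspection afterwards.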

The practical applications of hybrid models span a range of industries, where they’re driving meaningful improvements in efficiency and accuracy. For example, in customer service, platforms like LinkedIn use hybrid models to enhance user support. Knowledge Graphs organize user data, making relevant information easy to retrieve, while GNNs analyze past interactions to predict and resolve potential issues. This allows customer service representatives to respond more accurately and efficiently, creating a more positive user experience.

In healthcare, predictive analytics powered by hybrid models help providers anticipate patient needs. GNNs analyze patient history and treatment responses, while Knowledge Graphs provide context by linking medical conditions and protocols. This dual approach allows doctors to identify high-risk patients and implement preventive care, ultimately improving patient outcomes.

Similarly, in finance, hybrid models are used to detect fraud. GNNs spot suspicious patterns in transaction networks, while Knowledge Graphs organize data by connecting accounts, transactions, and behavioral trends, enabling financial institutions to detect fraud faster and more effectively.

Scaling hybrid models presents real challenges. Every new node or edge in a GNN or Knowledge Graph adds computation, and because each additional GNN layer pulls in another hop of neighbors, the amount of data a single prediction touches can grow exponentially with depth, requiring significant computational resources. This is similar to expanding a city’s subway system: as new lines and stations are added, the infrastructure must adapt to increased capacity. However, advancements in computing power and memory-efficient algorithms are making it possible to implement hybrid models at an enterprise level, particularly in data-heavy sectors like finance and healthcare, where the ability to process large datasets opens new avenues for insight.
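One widely used memory-saving technique is neighbor sampling, as in GraphSAGE: instead of gathering a node's full multi-hop neighborhood, each layer looks at only a fixed number of randomly chosen neighbors. Whether the speakers had this specific method in mind isn't stated; the hub-heavy graph and the fan-out values below are hypothetical.

```python
import random


def sample_neighborhood(adj, seeds, fanouts, rng):
    """Collect the nodes a multi-layer GNN needs for `seeds`, keeping at most
    fanouts[i] randomly chosen neighbors per node at hop i + 1."""
    layers = [set(seeds)]
    frontier = set(seeds)
    for fanout in fanouts:
        nxt = set()
        for node in frontier:
            nbrs = adj.get(node, [])
            nxt.update(rng.sample(nbrs, min(fanout, len(nbrs))))
        layers.append(nxt)
        frontier = nxt
    return layers


# Hypothetical hub-heavy graph: node 0 links to nodes 1..1000,
# and each of those links back to 0 plus ten nodes of its own.
adj = {0: list(range(1, 1001))}
for i in range(1, 1001):
    adj[i] = [0] + [1000 + 10 * i + j for j in range(10)]

full_two_hop = set(adj[0]) | {n for nbr in adj[0] for n in adj[nbr]}
sampled = sample_neighborhood(adj, [0], fanouts=[10, 5], rng=random.Random(0))
print("full 2-hop neighborhood:", len(full_two_hop), "nodes; sampled:", sum(len(s) for s in sampled))
```

A two-layer prediction for node 0 would otherwise touch over eleven thousand nodes; with fan-outs of 10 and 5 it touches at most 61, at the cost of some randomness in what each prediction sees.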

An important theme from the chat was the potential of hybrid models to promote explainability and transparency in AI. Knowledge Graphs inherently provide interpretable layers of information, while GNNs add insights into complex relationships. Together, they move AI away from being a “black box,” offering a clearer understanding of how models reach their predictions. This transparency is especially crucial in healthcare and finance, where trust in AI recommendations is essential. For instance, a healthcare provider using a hybrid model can see not only the predicted treatment plan but also the reasoning trail that led to the recommendation, making it easier to communicate with patients and build trust in AI-based decision-making.
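One simple way a Knowledge Graph can supply a "reasoning trail" is as a chain of facts connecting the patient to the recommendation. In this sketch a breadth-first search finds the shortest such chain; the triples are hypothetical, and in a hybrid system the recommendation itself might come from the GNN while the graph supplies the human-readable path.

```python
from collections import deque

# Hypothetical triples linking a patient to a recommended test.
TRIPLES = [
    ("patient_42", "diagnosed_with", "type_2_diabetes"),
    ("type_2_diabetes", "risk_factor_for", "heart_disease"),
    ("heart_disease", "risk_assessed_with", "lipid_panel"),
    ("patient_42", "lives_in", "springfield"),
]


def explain(triples, start, goal):
    """Breadth-first search for the shortest chain of triples from `start` to `goal`."""
    out_edges = {}
    for s, r, o in triples:
        out_edges.setdefault(s, []).append((r, o))
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for rel, nxt in out_edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None  # no chain of facts connects the two


for step in explain(TRIPLES, "patient_42", "lipid_panel"):
    print(*step)
```

Each printed line is a fact a clinician can check, which makes the recommendation easier to discuss with a patient than a bare score would be.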

By merging the analytical strengths of GNNs with the structured organization of Knowledge Graphs, these models offer a way forward for responsible and impactful AI. Industries can benefit from more accurate, transparent, and explainable applications that empower professionals to make data-informed, ethical decisions. This hybrid approach combines powerful insights with interpretability and points toward a new standard for how AI can function as a trusted partner in decision-making.